One problem with Named Entity Recognition (NER) models is that they are domain-specific. The trained pipelines typically include a tagger, a lemmatizer, a parser and an entity recognizer. The `Doc` is processed by these components in several successive steps, which is also referred to as the processing pipeline.

# spaCy Part-of-Speech Tagger: How To #

spaCy has identified the POS of the word ‘play’ correctly in both sentences.

## Basic Functionality ##

Before we dive deeper into different spaCy functions, let’s briefly see how to work with it. Since spaCy doesn’t provide stemming, we’ll use NLTK to perform it. NLTK provides several well-known stemmers, such as Lancaster, Porter, and Snowball. The Porter stemmer works very well in many cases, so we’ll use it to extract stems from the sentence. To learn more about the rules of Porter stemming, visit this link.
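A minimal sketch of stemming with NLTK, assuming the `nltk` package is installed (the Porter and Snowball stemmers need no extra data download). The word list is illustrative and not taken from the original example; Snowball is included only for comparison with Porter.

```python
from nltk.stem import PorterStemmer, SnowballStemmer

# Porter: the classic rule-based suffix-stripping stemmer
porter = PorterStemmer()
# Snowball ("Porter2"): a later refinement; the language must be given
snowball = SnowballStemmer("english")

words = ["play", "plays", "played", "playing", "studies"]

porter_stems = [porter.stem(w) for w in words]
snowball_stems = [snowball.stem(w) for w in words]

for w, p, s in zip(words, porter_stems, snowball_stems):
    print(f"{w:10} porter={p:8} snowball={s}")
```

All inflected forms of *play* collapse to the same stem, but *studies* becomes *studi*: a stem does not have to be a real dictionary word.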

I’ve listed below the different statistical models in spaCy along with their specifications:

- `en_core_web_sm`: English multi-task CNN trained on OntoNotes.

The difference between stemming and lemmatization is that stemming is rule-based: it trims or appends affixes to arrive at the root of a word, whereas lemmatization reduces a word to its canonical form, called a lemma. As a result, lemmatization typically produces a more meaningful base form than stemming.
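To make the contrast between stemming and lemmatization concrete, here is a deliberately tiny sketch in plain Python. Both `toy_stem` (suffix stripping by rule) and `toy_lemmatize` (lookup in a hand-written lexicon) are hypothetical helpers for illustration only; they are not how NLTK or spaCy work internally, and real lemmatizers rely on far larger resources.

```python
# Toy rule-based stemmer: strips the first matching suffix.
# Illustrative only; real stemmers such as Porter use many more rules.
SUFFIXES = ("ing", "ies", "ed", "es", "s")


def toy_stem(word: str) -> str:
    for suffix in SUFFIXES:
        # Only strip if at least 3 characters remain, so short words survive.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Toy lemmatizer: looks up the canonical form in a tiny hypothetical lexicon;
# unknown words are returned unchanged.
LEXICON = {
    "studies": "study",
    "studying": "study",
    "better": "good",
    "was": "be",
    "mice": "mouse",
}


def toy_lemmatize(word: str) -> str:
    return LEXICON.get(word, word)


words = ["studies", "studying", "better", "was", "mice"]
stems = [toy_stem(w) for w in words]
lemmas = [toy_lemmatize(w) for w in words]

for w, stem, lem in zip(words, stems, lemmas):
    print(f"{w:10} stem={stem:8} lemma={lem}")
```

The stemmer maps *studies* and *studying* to two different stems (*stud* and *study*) and cannot relate *better* to *good*, whereas the lookup returns a meaningful lemma for each word. The price is that lemmatization needs lexical resources (and, for ambiguous words such as *better*, the part of speech), while stemming needs only rules.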

Stemming and lemmatization are two different but closely related methods for converting a word to its root or base form. spaCy’s trained models, such as `en_core_web_sm`, enable it to perform several NLP tasks, such as part-of-speech tagging, named entity recognition, and dependency parsing.

As you can see from the result, the tokenizer treats “U.K” and “U.S.A” as single tokens instead of splitting them into ‘U’, ‘.’ and ‘K’. It also recognizes that the period following France marks the end of a sentence and should be treated as a separate token.

There are 1000 negative texts in the current corpus. For example, suppose the lemma *action* occurs 691 times in the negative reviews collection, and these occurrences are scattered across 337 different documents.

Most importantly, we can describe the quality of the pattern retrieval with two measures, precision and recall, which are computed from the counts of correct matches, false positives, and false negatives. We can summarize the pattern retrieval results as follows:

# spaCy Part-of-Speech Tagger Manual #

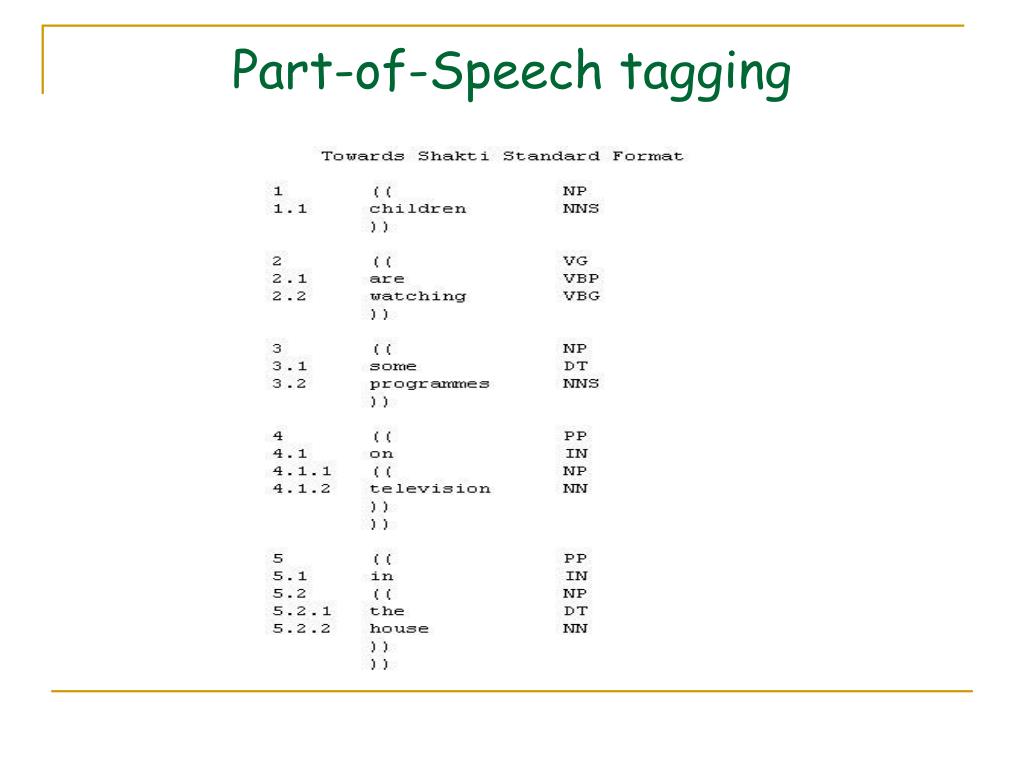

Figure 5.3: Manual annotation of English PPs in 1793-Washington.

As shown in Figure 5.3, the manual annotation identified 21 PPs in the text, while the regular expression returned 20 tokens. A comparison of the two results shows two kinds of error:

False Negatives: true patterns in the data that are not identified by the system (cf. green in Figure 5.3).

False Positives: patterns identified by the system (i.e., the regular expression) that are in fact not true patterns (cf. blue in Figure 5.3).

In the manual annotation above (Figure 5.3), the phrases highlighted in green are NOT identified by the current regex query, i.e., they are False Negatives:

- `Of/adp the/det present/adj solemn/adj ceremony/noun`
- `Of/adp this/det distinguished/adj honor/noun`

In the regex result, the returned tokens (rows highlighted in blue) are False Positives: the regular expression identified them as PPs, but according to the manual annotation they are NOT PPs.
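The evaluation described above can be sketched in plain Python. Everything in this example is hypothetical: the word/tag text, the manual (gold) annotation, and the regex, which is a deliberately narrow ADP + DET + NOUN query rather than the one used for Figure 5.3. Precision and recall are computed with their standard definitions: precision = TP / (TP + FP) and recall = TP / (TP + FN).

```python
import re

# Hypothetical word/tag text (not the 1793-Washington data from Figure 5.3)
tagged = (
    "I/pron speak/verb of/adp the/det present/adj solemn/adj ceremony/noun "
    "in/adp the/det city/noun and/cconj know/verb that/adp the/det people/noun "
    "trust/verb in/adp the/det constitution/noun with/adp great/adj joy/noun"
)

# Deliberately narrow, hypothetical PP query: ADP + DET + NOUN.
# (?<!\S) makes every match start at the beginning of a word/tag token.
PP_PATTERN = re.compile(r"(?<!\S)\S+/adp \S+/det \S+/noun")
predicted = set(PP_PATTERN.findall(tagged))

# Hypothetical manual annotation of the PPs in the same text
gold = {
    "of/adp the/det present/adj solemn/adj ceremony/noun",
    "in/adp the/det city/noun",
    "in/adp the/det constitution/noun",
    "with/adp great/adj joy/noun",
}

true_pos = predicted & gold   # found by the regex and annotated as PP
false_pos = predicted - gold  # found by the regex but not a true PP
false_neg = gold - predicted  # true PPs the regex missed

precision = len(true_pos) / len(predicted) if predicted else 0.0
recall = len(true_pos) / len(gold) if gold else 0.0

print("False positives:", sorted(false_pos))
print("False negatives:", sorted(false_neg))
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here the narrow pattern misses every PP containing an adjective (false negatives, like the green phrases above), and the hypothetical mis-tag `that/adp` produces a false positive. Widening the pattern, e.g. allowing optional adjectives, would raise recall but may also admit more false positives.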
